To perform k-fold cross-validation for hyperparameter optimization in XGBoost, use XGBClassifier or XGBRegressor with GridSearchCV or RandomizedSearchCV from sklearn.model_selection. Each candidate parameter combination is scored by its average performance across the k folds, and the best-scoring combination is selected.

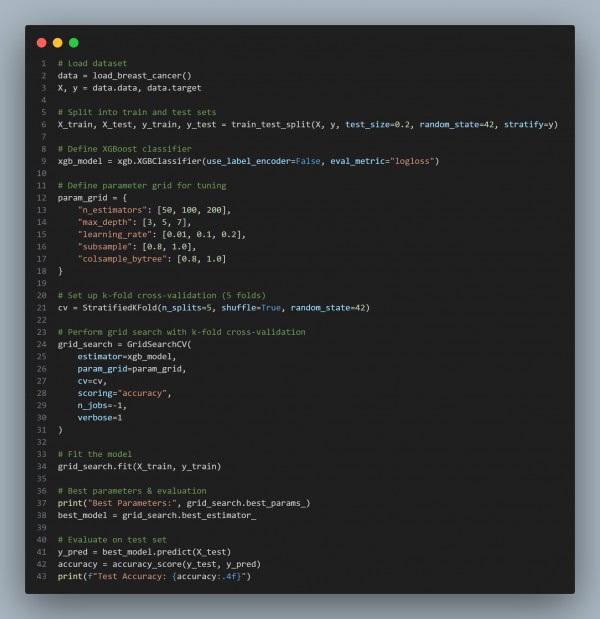

Here is the code snippet given below:

The code above uses the following techniques:

- GridSearchCV for exhaustive hyperparameter search:
  - Tunes n_estimators, max_depth, learning_rate, subsample, and colsample_bytree by trying every combination in the grid.
- StratifiedKFold for balanced class distribution:
  - Ensures each fold preserves the class proportions of the full dataset, so validation scores are not skewed by unrepresentative folds.
- Automatic selection of the best model (grid_search.best_estimator_):
  - Uses 5-fold CV to score every hyperparameter combination, then refits the best combination on the full training data (GridSearchCV's default refit=True behavior).

Hence, using k-fold cross-validation in GridSearchCV selects the best-performing hyperparameters from the grid for XGBoost while reducing the risk of overfitting them to a single train/validation split, which tends to improve generalization.
