To prevent a Variational Autoencoder (VAE) from generating overly simplistic outputs, give it a higher-capacity latent space, use a more expressive decoder, tune the KL weight in the loss (the β in a β-VAE), and train on richer, more diverse data.

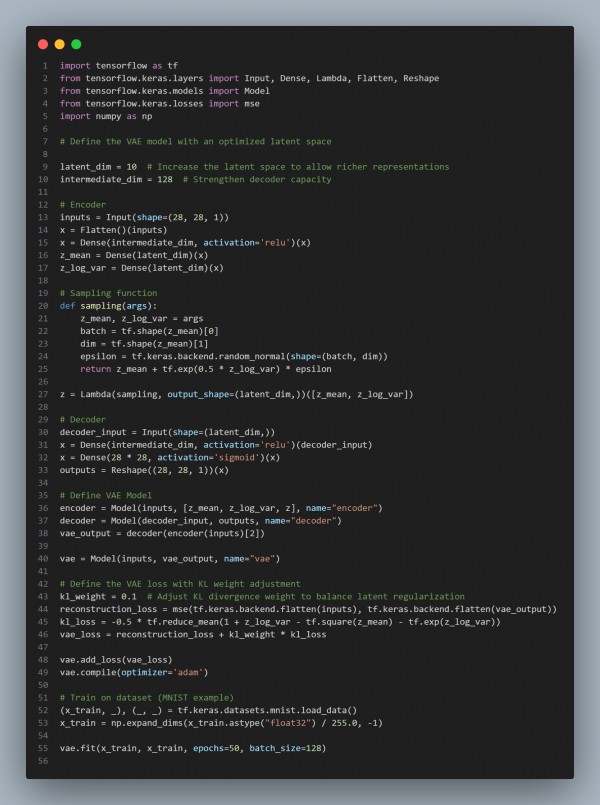

Here is the code snippet you can refer to:

The code above uses the following key approaches:

- Increased latent space (latent_dim=10)
  - Gives the model more latent dimensions in which to encode distinct, meaningful variations.
- Enhanced decoder (intermediate_dim=128)
  - Increases generative capacity, which helps reduce blurry or overly simple outputs.
- Adjusted KL loss weight (kl_weight=0.1)
  - Down-weights the KL term relative to the reconstruction term, so the latent space is not over-constrained toward the prior.
- Training with normalized data (x_train / 255.0)
  - Scales 8-bit pixel values to the [0, 1] range, which keeps optimization stable and supports better reconstruction fidelity.
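Because the original snippet is only available as an image, here is a framework-free sketch (pure Python, standard library only) of how three of the listed ideas fit together: pixel normalization (mirroring x_train / 255.0), the reparameterization trick over a 10-dimensional latent, and a KL-weighted loss using kl_weight=0.1. It is not the code from the screenshot. It assumes a binary cross-entropy reconstruction term, which is a common choice for [0, 1]-scaled images, and all helper names (normalize_pixels, reparameterize, beta_vae_loss, and so on) are hypothetical. The decoder width (intermediate_dim) is a property of the network layers and is not modeled here.

```python
import math
import random


def normalize_pixels(pixels):
    """Scale 8-bit pixel values (0-255) to [0, 1], like x_train / 255.0."""
    return [p / 255.0 for p in pixels]


def reparameterize(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def binary_cross_entropy(x, x_hat, eps=1e-7):
    """Summed per-pixel BCE; predictions are clipped to avoid log(0)."""
    total = 0.0
    for xi, pi in zip(x, x_hat):
        pi = min(max(pi, eps), 1.0 - eps)
        total -= xi * math.log(pi) + (1.0 - xi) * math.log(1.0 - pi)
    return total


def kl_divergence(mu, log_var):
    """KL(q(z|x) || N(0, I)) for a diagonal Gaussian, summed over latent dims."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def beta_vae_loss(x, x_hat, mu, log_var, kl_weight=0.1):
    """Reconstruction loss plus the KL term scaled by kl_weight (beta)."""
    return binary_cross_entropy(x, x_hat) + kl_weight * kl_divergence(mu, log_var)


# Usage with made-up values (hypothetical encoder/decoder outputs).
latent_dim = 10
x = normalize_pixels([0, 128, 255, 64])
x_hat = [0.05, 0.5, 0.95, 0.25]   # hypothetical decoder reconstruction
mu = [0.3] * latent_dim           # hypothetical encoder mean
log_var = [-0.2] * latent_dim     # hypothetical encoder log-variance

z = reparameterize(mu, log_var, random.Random(0))
loss_weak_kl = beta_vae_loss(x, x_hat, mu, log_var, kl_weight=0.1)
loss_full_kl = beta_vae_loss(x, x_hat, mu, log_var, kl_weight=1.0)
print(len(z), loss_weak_kl, loss_full_kl)
```

With kl_weight below 1, the KL penalty contributes less to the total, so the optimizer is pushed less strongly toward collapsing the latent code onto the prior. This is the trade-off the kl_weight=0.1 setting is meant to strike.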

In short, by increasing the latent dimensionality, strengthening the decoder, tuning the KL weight, and using well-preprocessed data, a VAE can generate richer and more complex outputs while keeping a meaningful latent structure.
