To address missing context caused by an outdated tokenizer, switch to a modern subword-based tokenizer such as SentencePiece, BPE, or WordPiece, then re-tokenize your datasets with it and retrain the model on the re-tokenized data.
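
Subword tokenizers avoid losing context because a word the tokenizer has never seen is split into known pieces instead of collapsing into a single unknown token. The toy byte-pair encoding (BPE) trainer below is a minimal sketch of that idea in plain Python, assuming a tiny made-up corpus and no byte fallback or pre-tokenization rules; `train_bpe`, `merge_pair`, and `encode` are hypothetical helpers for illustration, not a library API. The `</w>` symbol marks the end of a word.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    merged = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def train_bpe(words, num_merges):
    """Learn up to `num_merges` BPE merge rules from a list of words."""
    # Each word starts as a tuple of characters plus an end-of-word marker.
    vocab = Counter(tuple(word) + ("</w>",) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by how often each word occurs.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the pair counted first
        merges.append(best)
        # Rewrite every word with the new merge applied.
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def encode(word, merges):
    """Segment a (possibly unseen) word by replaying the learned merges in order."""
    symbols = tuple(word) + ("</w>",)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


corpus = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
merges = train_bpe(corpus, num_merges=10)
print(encode("lowest", merges))  # ['low', 'est</w>']; "lowest" never appears in the corpus
```

A word-level vocabulary built from the same corpus would map `lowest` to an unknown token, while the learned merges still recover `low` and `est`, so the model keeps that information.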

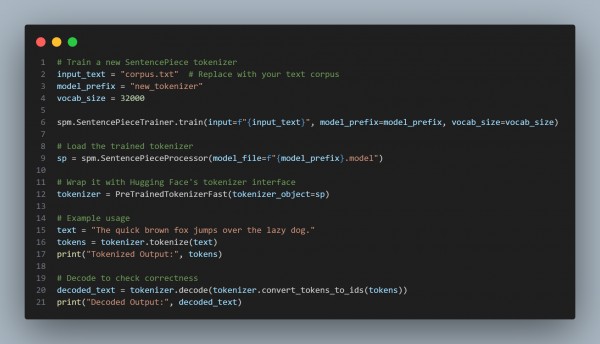

Here is the code snippet you can refer to:

The code above relies on the following key points:

- SentencePiece Training: Trains a SentencePiece model, a modern tokenizer that learns subword segmentation directly from raw text.

- Vocab Update: Trains a new tokenizer with a vocabulary size of 32,000 for better generalization.

- Hugging Face Compatibility: Wraps the trained tokenizer for easy integration with transformers.

- Tokenization & Decoding: Checks that text is segmented into subwords correctly and that generated token IDs decode back to the original text.

- Improved Context Retention: Reduces missing context issues caused by outdated tokenization methods.

Hence, by upgrading to a modern subword-based tokenizer like SentencePiece and integrating it with the model, we enhance context retention and improve the quality of generated language outputs.
