Autoregressive transformers generate text sequentially, one token at a time, with each token conditioned only on the tokens before it. This makes generation slower but gives fine-grained control over the output. Bidirectional transformers process the entire sequence at once, so every token sees both its left and right context. This improves context understanding but makes them generally less suited to sequence generation.

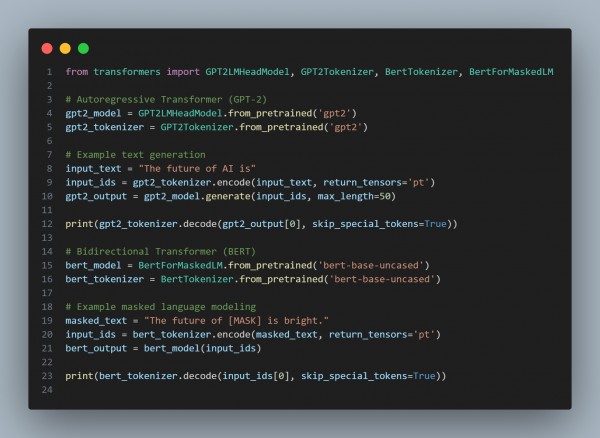

Here is a code snippet showing how it is done:
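As a minimal, library-free sketch of the underlying mechanism (it does not load GPT-2 or BERT themselves), the difference can be expressed as two attention masks. The function names `causal_mask` and `bidirectional_mask` and the example sentence are assumptions made for illustration.

```python
def causal_mask(n):
    """GPT-2 style: row i lists which positions token i may attend to.
    A token sees itself and everything to its left, nothing to its right."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """BERT style: every token may attend to every position."""
    return [[1] * n for _ in range(n)]


# Illustrative input; any token list works.
tokens = ["the", "cat", "sat", "down"]

for name, mask in (
    ("autoregressive (causal)", causal_mask(len(tokens))),
    ("bidirectional", bidirectional_mask(len(tokens))),
):
    print(name)
    for tok, row in zip(tokens, mask):
        visible = [t for t, allowed in zip(tokens, row) if allowed]
        print(f"  {tok!r} attends to {visible}")
```

Under the causal mask, "cat" can only see "the" and itself, which is what allows the model to be trained to predict the next token. Under the bidirectional mask, "cat" also sees "sat" and "down".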

The code above illustrates the following key points:

- Autoregressive (GPT-2): Generates text one token at a time, making it suitable for tasks like text generation.

- Bidirectional (BERT): Processes entire sequences to understand context but doesn't directly generate text, focusing on tasks like masked language modeling.

- Task Suitability: Autoregressive models excel at generation, while bidirectional models excel at understanding context.
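The points above can be sketched with a toy example. The lookup tables `NEXT_TOKEN` and `FILL` are hypothetical stand-ins for learned models, chosen only to show which context each style of prediction has access to. This is a sketch under those assumptions, not how GPT-2 or BERT are implemented.

```python
# Hypothetical toy "models": lookup tables standing in for learned networks.
NEXT_TOKEN = {"<s>": "the", "the": "cat", "cat": "sat", "sat": "</s>"}
FILL = {("the", "sat"): "cat", ("cat", "</s>"): "sat"}


def generate(start="<s>", max_len=10):
    """Autoregressive decoding: each step sees only the tokens produced so far."""
    out = [start]
    while len(out) < max_len:
        nxt = NEXT_TOKEN.get(out[-1])
        if nxt is None or nxt == "</s>":
            break
        out.append(nxt)
    return out[1:]  # drop the start marker


def fill_mask(tokens):
    """Masked prediction: each [MASK] is filled from its left AND right neighbours."""
    out = list(tokens)
    for i, tok in enumerate(tokens):
        if tok == "[MASK]":
            left = tokens[i - 1] if i > 0 else "<s>"
            right = tokens[i + 1] if i + 1 < len(tokens) else "</s>"
            out[i] = FILL.get((left, right), "[UNK]")
    return out


print(generate())
print(fill_mask(["the", "[MASK]", "sat"]))
```

`generate` builds a sequence from nothing but a start marker, which is why the autoregressive setup fits open-ended generation. `fill_mask` needs an existing sequence with a gap in it, which is why the bidirectional setup fits understanding tasks rather than free-form generation.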

Hence, autoregressive transformers offer sequential generation and flexibility, while bidirectional models enhance contextual understanding but are limited in generating content. Choose based on the task's requirements.
