Disentangled representations improve interpretability in Generative AI by isolating independent factors of variation, enabling better control, debugging, and understanding of how different features influence creative outputs.

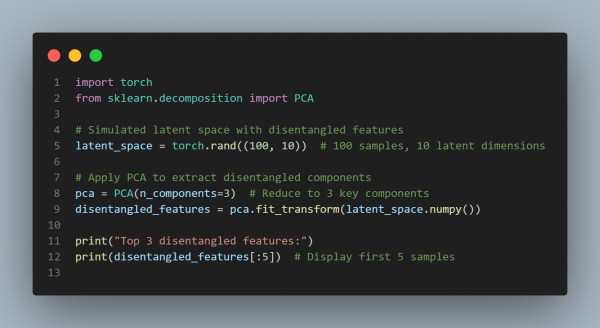

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Latent Space Simulation: Represents the abstract features learned by a generative model.

- Disentangling via PCA: Extracts uncorrelated principal directions of variation, which approximate independent factors, for analysis or control.

- Improved Control: Enables targeted manipulation of individual creative attributes, such as color or style.
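The three points above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the snippet referenced earlier. It uses only NumPy, computing PCA with `np.linalg.svd` (scikit-learn's `PCA` would work equally well). It simulates a 10-dimensional latent space driven by three hypothetical factors standing in for attributes like color or style, and it embeds those factors along orthonormal directions so that PCA can recover them. The helper names `simulate_latent_space`, `pca`, and `traverse` are illustrative.

```python
import numpy as np


def simulate_latent_space(n_samples=1000, latent_dim=10, seed=0):
    """Simulate latent codes driven by 3 hidden factors (hypothetical 'color', 'style', 'size')."""
    rng = np.random.default_rng(seed)
    # Assumed ground-truth factors with distinct variances (9, 4, 1) so PCA can order them.
    factors = rng.normal(size=(n_samples, 3)) * np.array([3.0, 2.0, 1.0])
    # Embed the factors along 3 orthonormal directions of the latent space (an assumption).
    q, _ = np.linalg.qr(rng.normal(size=(latent_dim, 3)))
    noise = 0.05 * rng.normal(size=(n_samples, latent_dim))
    return factors @ q.T + noise, factors


def pca(z, n_components):
    """PCA via SVD: returns the mean, principal directions (rows), and explained-variance ratios."""
    mean = z.mean(axis=0)
    # Rows of vt are orthonormal directions, sorted by decreasing singular value.
    _, s, vt = np.linalg.svd(z - mean, full_matrices=False)
    var = s**2 / (len(z) - 1)
    return mean, vt[:n_components], var[:n_components] / var.sum()


def traverse(z_point, components, k, deltas):
    """Shift one latent code along principal direction k only (a 'latent traversal')."""
    return np.array([z_point + d * components[k] for d in deltas])


latent, true_factors = simulate_latent_space()
mean, components, ratio = pca(latent, n_components=3)

# Per-sample coordinates along each principal direction.
scores = (latent - mean) @ components.T
# |correlation| between each component (rows) and each ground-truth factor (columns).
alignment = np.abs(np.corrcoef(scores.T, true_factors.T)[:3, 3:])

# Controlled edit: vary only the first (largest-variance) factor of sample 0.
edits = traverse(latent[0], components, k=0, deltas=[-2.0, 0.0, 2.0])

print("explained variance ratio:", ratio.round(3))
print("alignment with true factors:\n", alignment.round(2))
```

Because the simulated factors are embedded orthogonally with distinct variances, each principal component lines up with one factor, and `traverse` changes a single attribute at a time. Latents from a real generative model are usually entangled non-linearly, so principal components are only uncorrelated directions and may not correspond to a single human-readable attribute.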

Hence, disentangled representations enhance interpretability in creative tasks by isolating and exposing independent features for analysis and control.
