Inverse Reinforcement Learning (IRL) helps fine-tune generative AI models by inferring a reward function from observed behavior, such as human-written or human-preferred outputs, instead of relying on a predefined reward function. The model is then optimized against that learned reward. This approach can improve the quality, coherence, and alignment of outputs with human preferences, especially in tasks like content generation, recommendation systems, or dialogue systems.

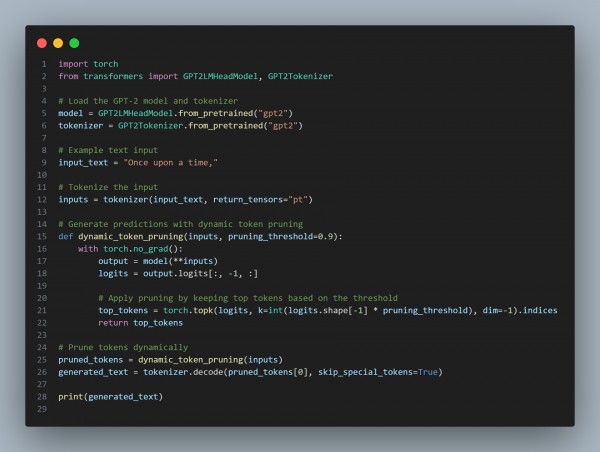

Here is the code snippet you can refer to:
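The following is a minimal sketch of the idea, not a definitive implementation. It assumes a one-step setting in which each candidate output is described by a small feature vector, and it uses a maximum-entropy style IRL update to learn linear reward weights from the outputs humans picked. The feature names, the candidate values, and the helper `learn_reward_weights` are hypothetical and only serve as illustration.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def learn_reward_weights(candidate_features, expert_indices, lr=0.5, steps=500):
    """Learn linear reward weights w so that p(x) ∝ exp(w · f(x))
    explains the expert (human-chosen) outputs.

    The gradient of the demonstration log-likelihood is
    (mean expert features) - (expected features under the current model).
    """
    w = np.zeros(candidate_features.shape[1])
    expert_mean = candidate_features[expert_indices].mean(axis=0)
    for _ in range(steps):
        probs = softmax(candidate_features @ w)   # current output distribution
        expected = probs @ candidate_features     # model feature expectation
        w += lr * (expert_mean - expected)        # gradient ascent step
    return w

# Hypothetical features for four candidate replies:
# [relevance, politeness, verbosity]
candidate_features = np.array([
    [0.9, 0.8, 0.2],
    [0.8, 0.9, 0.3],
    [0.3, 0.2, 0.9],
    [0.1, 0.5, 0.8],
])

# Assumed demonstrations: humans picked replies 0 and 1.
expert_indices = np.array([0, 1, 0, 1, 0])

w = learn_reward_weights(candidate_features, expert_indices)
rewards = candidate_features @ w      # learned reward for each candidate
best = int(np.argmax(rewards))        # highest-reward candidate

print("learned weights:", w)
print("rewards:", rewards)
print("preferred candidate:", best)
```

In an actual fine-tuning setup, the learned reward would typically stand in for a hand-written reward inside a policy-optimization loop, and the features would come from the model itself rather than a fixed table.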

The key points of this approach are:

- Human-Aligned Outputs: IRL allows fine-tuning based on real-world preferences or feedback, improving output relevance.

- Flexible Reward Function: Because the reward function is learned rather than hand-coded, it can be re-estimated for a specific task or from new human feedback, guiding the model to generate more desirable outputs.

- Task-Specific Improvements: IRL is beneficial in applications like conversational agents, recommendation systems, or content generation, where outputs must align with subjective human goals.

These are the main benefits of using inverse reinforcement learning to fine-tune generative AI outputs.
