To improve sample diversity in a generative model, you can add noise to the latent space and use a loss function that combines a reconstruction term with a regularization term.

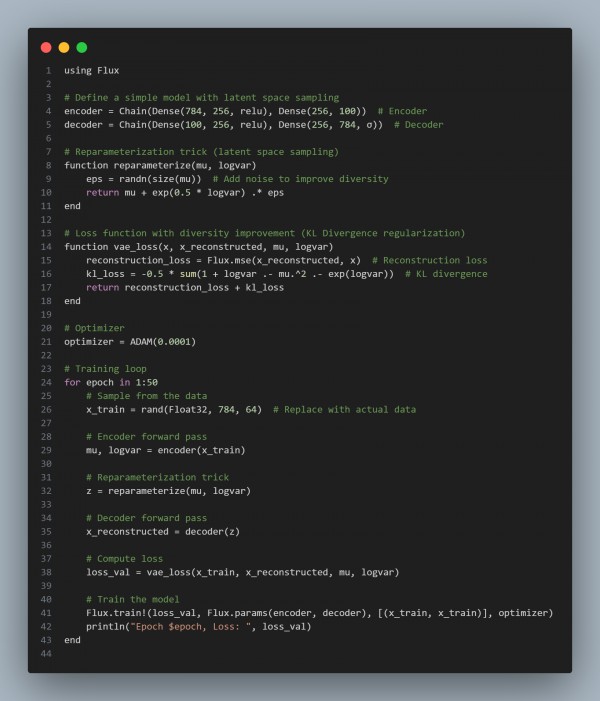

Here is the code snippet below showing how it is done:

The approach relies on the following key points:

- Noise in Latent Space: The reparameterization step (reparameterize function) samples the latent vector as the mean plus the standard deviation times random noise. This noise allows more variation in the generated samples and so improves diversity.

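- KL Divergence: Regularize the latent space with a KL divergence term that keeps the learned latent distribution close to the prior. This promotes more diverse representations and helps keep generated samples from collapsing onto a few modes.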

- Loss Function: Train on the sum of a reconstruction loss and the KL regularization term, so that the model reproduces its inputs while still keeping a well-spread latent space to sample from.

- Data Augmentation: If using images, apply augmentation techniques (e.g., rotations, scaling) to introduce more variability into the training data.
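The first three points can be sketched in a few lines. This is a minimal, framework-free illustration using only the Python standard library, not the original snippet. The names `noise_scale` and `beta` are assumptions added for illustration, and the reconstruction loss is assumed to be a sum of squared errors. In a real model these would be tensor operations in your deep learning framework.

```python
import math
import random


def reparameterize(mu, logvar, noise_scale=1.0, rng=random):
    """Sample z = mu + noise_scale * sigma * eps, with eps ~ N(0, 1).

    noise_scale is a hypothetical knob: 1.0 gives the standard VAE
    sampling step, larger values inject extra noise into the latent vector.
    """
    return [
        m + noise_scale * math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mu, logvar)
    ]


def kl_divergence(mu, logvar):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over the latent dimensions."""
    return -0.5 * sum(
        1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar)
    )


def reconstruction_loss(x, x_hat):
    """Sum of squared errors between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def vae_loss(x, x_hat, mu, logvar, beta=1.0):
    """Reconstruction loss plus a beta-weighted KL regularizer.

    beta is an assumed weighting factor; beta=1.0 is the plain sum.
    """
    return reconstruction_loss(x, x_hat) + beta * kl_divergence(mu, logvar)


# Example usage with made-up encoder outputs for a 2-dimensional latent space.
rng = random.Random(0)
mu, logvar = [0.5, -0.2], [0.0, -1.0]
z = reparameterize(mu, logvar, noise_scale=1.5, rng=rng)
loss = vae_loss([1.0, 0.0], [0.9, 0.1], mu, logvar, beta=0.5)
```

Setting `noise_scale` above 1.0 widens the spread of sampled latent vectors, while `beta` controls how strongly the latent distribution is pulled toward the prior. Both values would need tuning for a given dataset.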

By applying the techniques above, you can improve sample diversity in a generative model.

Related Post: Techniques for ensuring diverse sample generation in GANs and VAEs
