To address missing tokens in Hugging Face's language model predictions, adjust decoding settings like max_length, min_length, and no_repeat_ngram_size to improve output quality and completeness.

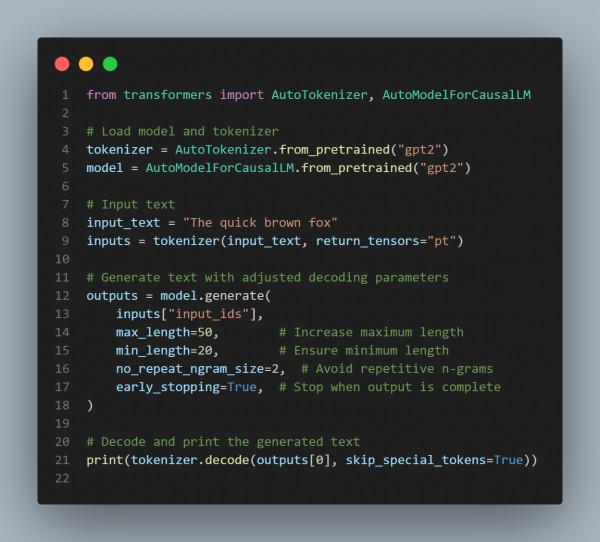

The code relies on the following decoding settings:

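- Adjust max_length and min_length: max_length caps the total sequence length (prompt plus generated tokens for decoder-only models), so a value that is too low cuts the output off; min_length keeps the model from ending the sequence before that many tokens.

- Set no_repeat_ngram_size: Prevents any n-gram of that size from appearing twice, which avoids repetitive phrases that can disrupt meaningful predictions.

- Enable early_stopping: With beam search (num_beams > 1), stops generation as soon as enough complete candidate sequences have been found. It has no effect on greedy decoding or sampling.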
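
The settings above can be sketched roughly as follows. This is a minimal sketch, not the exact code from the answer: it assumes the `transformers` library with a PyTorch backend and the `gpt2` checkpoint, and the helper names `build_generation_kwargs` and `generate_text`, the default values, and the use of 4 beams are all illustrative assumptions.

```python
def build_generation_kwargs(max_length=50, min_length=10,
                            no_repeat_ngram_size=2, num_beams=4):
    """Collect the decoding settings discussed above (hypothetical helper)."""
    if min_length > max_length:
        raise ValueError("min_length must not exceed max_length")
    return {
        "max_length": max_length,            # upper bound, prompt tokens included
        "min_length": min_length,            # end-of-sequence is suppressed before this
        "no_repeat_ngram_size": no_repeat_ngram_size,  # block repeated n-grams
        "num_beams": num_beams,              # beam search, so early_stopping applies
        "early_stopping": True,              # stop once enough beams are complete
    }


def generate_text(prompt, model_name="gpt2", **overrides):
    """Generate a continuation of `prompt` (model choice is an assumption)."""
    # Imported lazily so the settings helper works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 defines no pad token
        **build_generation_kwargs(**overrides),
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_text("The future of AI is", max_length=80)` would download the checkpoint on first use. If the output still ends too early, raising max_length is usually the first thing to try.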

Hence, by referring to the above, you can address the issue of missing tokens in Hugging Face's language model predictions.
