To debug inconsistent outputs, first make the runs reproducible: set random seeds, disable dropout by putting the model in evaluation mode, and run inference without gradient tracking.

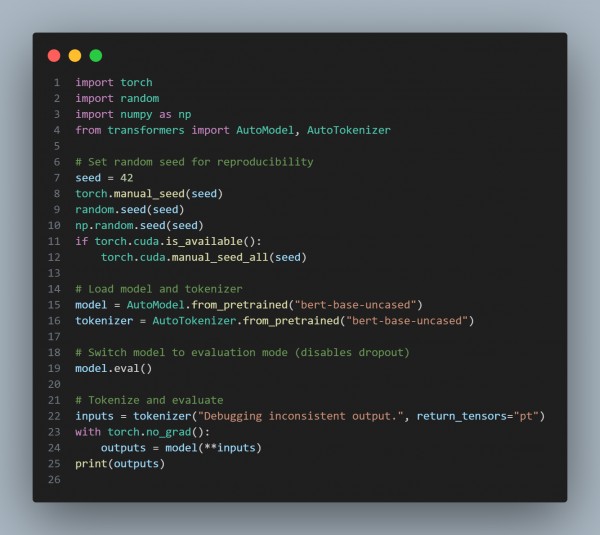

Here is the code reference showing how:

The code above uses the following approaches:

- Set Random Seed: Fixes the random number generators in Python, NumPy, and PyTorch so that repeated runs behave the same way.

- Evaluation Mode: Calling `model.eval()` disables stochastic behavior such as dropout during evaluation.

- Disable Gradients: Wrapping inference in `torch.no_grad()` skips gradient computation, which is unnecessary during evaluation and saves memory.
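The seeding step can be sketched as below. This is a minimal helper written for illustration, not the code from the image: the name `seed_everything` and the seed value 42 are assumptions, and the cuDNN flags only have an effect on a GPU. Hugging Face `transformers` also ships a `set_seed` function that serves the same purpose.

```python
import random

import numpy as np
import torch


def seed_everything(seed: int = 42) -> None:
    """Hypothetical helper: fix the RNGs used by Python, NumPy and PyTorch."""
    random.seed(seed)                 # Python's built-in RNG
    np.random.seed(seed)              # NumPy's global RNG
    torch.manual_seed(seed)           # PyTorch RNG on all devices
    torch.cuda.manual_seed_all(seed)  # explicit for CUDA; silently ignored without a GPU
    # Ask cuDNN for deterministic kernels instead of autotuned, possibly nondeterministic ones.
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False


# Reseeding before each draw reproduces the same random numbers.
seed_everything(42)
first = (random.random(), np.random.rand(), torch.rand(3))
seed_everything(42)
second = (random.random(), np.random.rand(), torch.rand(3))
print(first[0] == second[0], first[1] == second[1], torch.equal(first[2], second[2]))
# True True True
```

Call the helper once at the top of the script, before the model is loaded, so that any randomly initialized weights are reproducible too.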

Hence, by following the steps above, you can debug inconsistent outputs when running a Hugging Face model in evaluation mode.
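To confirm the fixes took effect, one quick check is to feed the same input through the model twice and compare the outputs. The sketch below assumes a tiny `nn.Sequential` with dropout as a hypothetical stand-in for a Hugging Face model so that it runs offline; with a real checkpoint you would load it via `from_pretrained(...)` and pass tokenized inputs instead. The helper `run_twice` is also illustrative.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for a Hugging Face model: a small network with dropout.
model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Dropout(p=0.5), nn.Linear(16, 2))


def run_twice(model: nn.Module, x: torch.Tensor) -> bool:
    """Return True if two forward passes on the same input give identical outputs."""
    with torch.no_grad():  # no gradient tracking needed for this check
        first = model(x)
        second = model(x)
    return torch.equal(first, second)


torch.manual_seed(0)
x = torch.randn(4, 8)

model.train()  # dropout active: the two passes usually differ
print("train mode identical:", run_twice(model, x))

model.eval()   # dropout disabled: the two passes match
print("eval mode identical:", run_twice(model, x))
```

If the outputs still differ after `model.eval()`, look for code that switches the model back with `model.train()`, or for other sources of randomness outside the forward pass.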
