To build a context-aware decoder for generative AI, use attention mechanisms to feed context into the decoding process. Transformers do this natively: self-attention lets the decoder condition on the tokens it has already generated, and encoder-decoder (cross) attention lets it condition on the encoded input.

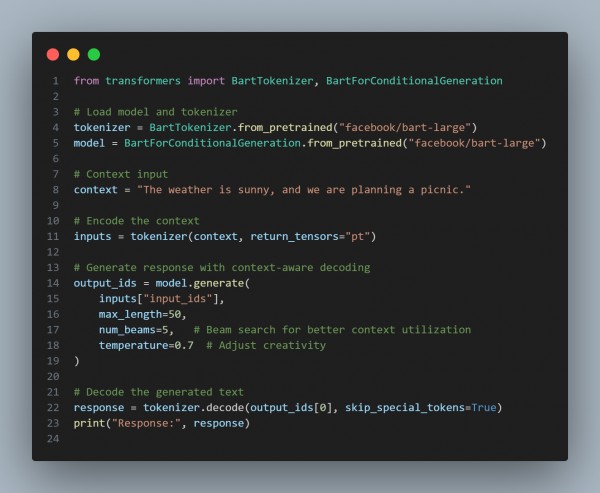

The snippet for this answer uses Hugging Face's transformers library with an encoder-decoder model (e.g., BART).
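A minimal sketch of that setup could look like the following. It assumes the `facebook/bart-large-cnn` checkpoint (a summarization fine-tune of BART) purely for illustration; the helper names `generate_with_context` and `demo`, and the default parameter values, are hypothetical and not taken from the original answer.

```python
def generate_with_context(model, tokenizer, context, num_beams=4,
                          max_new_tokens=60, do_sample=False, temperature=1.0):
    """Encode `context`, then let the decoder generate while attending to it."""
    # The encoder reads the context; the decoder reaches it via cross-attention.
    inputs = tokenizer(context, return_tensors="pt", truncation=True)

    gen_kwargs = {
        "num_beams": num_beams,          # beam search width
        "max_new_tokens": max_new_tokens,
        "early_stopping": True,          # stop once enough beams have finished
    }
    if do_sample:
        # Temperature only matters when sampling, so pass it only in that case.
        gen_kwargs.update(do_sample=True, temperature=temperature)

    output_ids = model.generate(**inputs, **gen_kwargs)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


def demo():
    """Hypothetical usage; downloads the assumed checkpoint on first call."""
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    name = "facebook/bart-large-cnn"  # assumed checkpoint, swap in your own
    tokenizer = AutoTokenizer.from_pretrained(name)
    model = AutoModelForSeq2SeqLM.from_pretrained(name)

    context = (
        "The customer reported that the mobile app crashes when uploading "
        "photos larger than 10 MB. Support asked for device logs and "
        "confirmed the issue on two Android versions."
    )
    print(generate_with_context(model, tokenizer, context))
```

Calling `demo()` requires the `transformers` and `torch` packages and network access for the first download. Keeping model loading separate from `generate_with_context` makes it easy to swap in another encoder-decoder checkpoint.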

The code relies on the following key techniques:

- Encoder-Decoder Models: Combine encoded context with decoder inputs.

- Attention Mechanisms: Ensure the decoder focuses on relevant parts of the context.

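- Beam Search & Temperature: Beam search keeps several candidate sequences in play to improve coherence; temperature rescales token probabilities and only takes effect when sampling is enabled.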
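To make the attention and temperature points concrete, here is a small standard-library sketch of scaled dot-product cross-attention for a single decoder query, plus a temperature-scaled softmax. The vectors are toy values chosen for illustration, and the function names are hypothetical; real models apply learned projections and multiple heads on top of this.

```python
import math


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query, keys, values):
    """Scaled dot-product attention of one decoder query over encoder states."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The context vector is the attention-weighted average of the values.
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context


# Toy encoder states for three context tokens (hypothetical values).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_query = [2.0, 0.0]

weights, context = cross_attention(decoder_query, encoder_states, encoder_states)
print("attention weights:", weights)
print("context vector:", context)

# Same logits, two temperatures: the lower one concentrates probability mass.
logits = [1.0, 2.0, 3.0]
print("T=0.5:", softmax(logits, temperature=0.5))
print("T=2.0:", softmax(logits, temperature=2.0))
```

The weights show which encoder positions the decoder step draws on most, which is the sense in which the decoder "focuses" on relevant context.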

Using these techniques, you can build context-aware decoders for generative AI applications.
