Fine-tuning with Low-Rank Adaptation (LoRA) freezes the pre-trained model weights and injects trainable low-rank matrices into selected layers. Because only these small matrices are updated, far fewer parameters need gradients and optimizer state, which reduces memory usage and compute during fine-tuning.
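To make the saving concrete, the adapted layer can be written as below. The symbols $W_0$, $d$, $k$, $r$ and the scaling factor $\alpha / r$ follow the common LoRA convention and are assumptions here, since the answer does not define them:

$$
h = W_0 x + \frac{\alpha}{r} B A x, \qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

Only $A$ and $B$ are trained, so a layer contributes $r(d + k)$ trainable parameters instead of $d \cdot k$. For example, with $d = k = 4096$ and $r = 8$, that is 65,536 trainable parameters against 16,777,216 frozen ones.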

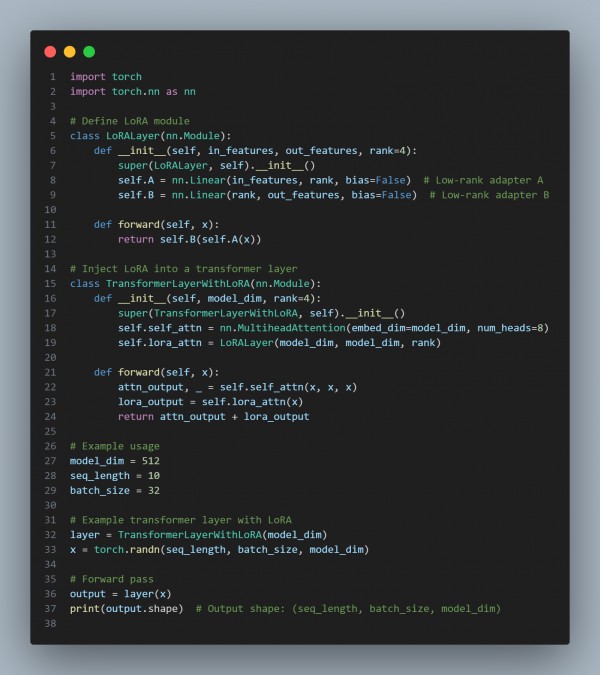

Here is the code snippet using PyTorch:
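The following is a minimal sketch rather than a definitive implementation. It assumes a toy single-head attention block; the names `LoRALinear`, `inject_lora`, `ToyAttention`, the target layer names `q_proj` and `v_proj`, and the values `r=8` and `alpha=16` are illustrative choices, not part of PyTorch or any LoRA library.

```python
import math

import torch
import torch.nn as nn


class LoRALinear(nn.Module):
    """Wraps a frozen nn.Linear and adds a trainable low-rank update B @ A."""

    def __init__(self, base: nn.Linear, r: int = 8, alpha: float = 16.0):
        super().__init__()
        self.base = base
        for p in self.base.parameters():
            p.requires_grad = False  # freeze the pre-trained weights
        self.scaling = alpha / r
        # A: (r, in_features) with random init; B: (out_features, r) with zero init,
        # so the wrapped layer initially behaves exactly like the base layer.
        self.lora_A = nn.Parameter(torch.empty(r, base.in_features))
        self.lora_B = nn.Parameter(torch.zeros(base.out_features, r))
        nn.init.kaiming_uniform_(self.lora_A, a=math.sqrt(5))

    def forward(self, x):
        update = x @ self.lora_A.T @ self.lora_B.T
        return self.base(x) + update * self.scaling


def inject_lora(model, target_names=("q_proj", "v_proj"), r=8, alpha=16.0):
    """Replace child nn.Linear modules whose attribute name is in target_names."""
    for module in list(model.modules()):
        for name, child in list(module.named_children()):
            if isinstance(child, nn.Linear) and name in target_names:
                setattr(module, name, LoRALinear(child, r=r, alpha=alpha))
    return model


class ToyAttention(nn.Module):
    """Hypothetical stand-in for a pre-trained model with attention projections."""

    def __init__(self, dim: int = 32, num_classes: int = 2):
        super().__init__()
        self.q_proj = nn.Linear(dim, dim)
        self.k_proj = nn.Linear(dim, dim)
        self.v_proj = nn.Linear(dim, dim)
        self.out = nn.Linear(dim, num_classes)

    def forward(self, x):  # x: (batch, seq, dim)
        q, k, v = self.q_proj(x), self.k_proj(x), self.v_proj(x)
        scores = q @ k.transpose(-2, -1) / math.sqrt(q.shape[-1])
        attn = torch.softmax(scores, dim=-1)
        return self.out((attn @ v).mean(dim=1))


torch.manual_seed(0)
model = ToyAttention()
for p in model.parameters():
    p.requires_grad = False  # freeze every original weight
inject_lora(model)  # adds trainable A and B to q_proj and v_proj

# Only the LoRA parameters are handed to the optimizer.
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.AdamW(trainable, lr=1e-3)

# One training step on random data, just to show the loop shape.
x = torch.randn(4, 10, 32)
y = torch.randint(0, 2, (4,))
loss = nn.functional.cross_entropy(model(x), y)
loss.backward()
optimizer.step()

print("trainable parameters:", sum(p.numel() for p in trainable))
print("total parameters:", sum(p.numel() for p in model.parameters()))
```

Initializing `lora_B` to zeros means the injected model starts out producing the same outputs as the frozen model, and training then moves it away from that starting point only through the low-rank matrices. In practice a task-specific head is often left trainable as well; this sketch freezes it to keep the example small.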

In the above code, we are using the following:

- LoRA layers: Add trainable low-rank adapters (matrices A and B) to the model while the original weights stay frozen.

- Integration: Inject the LoRA layers into specific parts of the model, such as the attention layers.

- Fine-tuning: Optimize only the low-rank parameters, which reduces resource usage while largely preserving performance.

Hence, by following the steps above, you can fine-tune models using Low-Rank Adaptation (LoRA).
