To use PyTorch's DataLoader with multiple workers for generative model training, you can set the num_workers parameter to parallelize data loading.

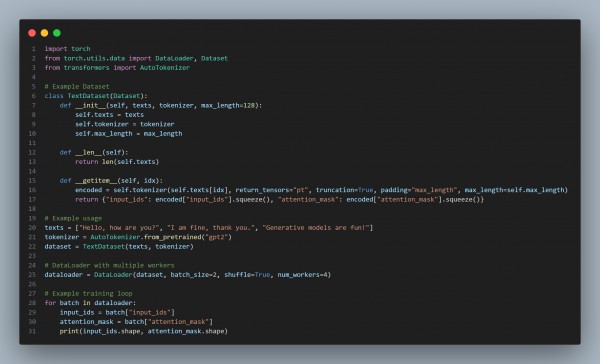

Here is a code reference you can follow:
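A minimal sketch of such a setup, assuming a synthetic dataset of random tensors in place of real training images. `RandomImageDataset` and `make_loader` are hypothetical names used only for illustration, and the batch size and tensor shape are arbitrary:

```python
import torch
from torch.utils.data import DataLoader, Dataset


class RandomImageDataset(Dataset):
    """Hypothetical stand-in dataset: random 3x32x32 tensors instead of real images."""

    def __init__(self, size=1024):
        self.size = size

    def __len__(self):
        return self.size

    def __getitem__(self, idx):
        # Seed per index so a given sample is the same no matter which worker loads it.
        gen = torch.Generator().manual_seed(idx)
        return torch.randn(3, 32, 32, generator=gen)


def make_loader(num_workers=4, batch_size=64):
    """Build a DataLoader that loads batches in `num_workers` subprocesses."""
    return DataLoader(
        RandomImageDataset(),
        batch_size=batch_size,
        shuffle=True,
        num_workers=num_workers,  # 0 means loading happens in the main process
        pin_memory=torch.cuda.is_available(),  # speeds up host-to-GPU copies when a GPU exists
    )


if __name__ == "__main__":
    # The guard matters on platforms that start workers with "spawn" (Windows, macOS).
    loader = make_loader(num_workers=4)
    for batch in loader:
        # A generator/discriminator training step would consume `batch` here.
        print(batch.shape)
        break
```

Passing num_workers=0 to the same function gives a single-process loader, which is handy for debugging dataset code before turning on parallel loading.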

In the above code, the key setting is num_workers=4: batches are prepared by four worker processes in parallel, so the model spends less time waiting on data, which improves training efficiency for large datasets. Adjust the value based on your system's CPU capabilities.
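One way to pick a starting value is to derive it from the number of CPU cores. The sketch below assumes a simple heuristic (leave one core for the main training process and cap the total); `suggest_num_workers` is a hypothetical helper, not part of PyTorch, and the best value still depends on your dataset and hardware:

```python
import os


def suggest_num_workers(max_workers=8):
    """Return a starting guess for DataLoader's num_workers (heuristic only)."""
    cpu_count = os.cpu_count() or 1  # os.cpu_count() can return None
    # Keep one core free for the main process; never go below 0 or above the cap.
    return max(0, min(max_workers, cpu_count - 1))


if __name__ == "__main__":
    print(suggest_num_workers())
```

Treat the result as a starting point and time a few epochs with nearby values before settling on one.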

Hence, by referring to the above, you can use PyTorch's DataLoader with multiple workers for generative model training.
