Pix2Pix is a conditional GAN for image-to-image translation: it learns to map an input image to a corresponding output image from paired training examples. The implementation follows these steps:

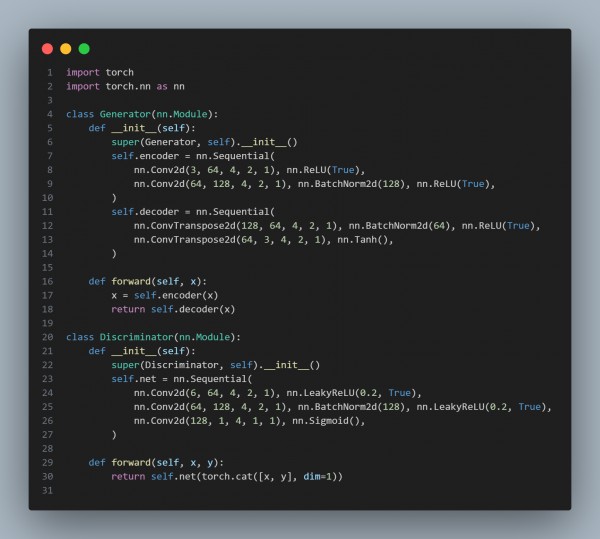

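- Define the Generator and Discriminator
  - The generator is a U-Net, an encoder-decoder with skip connections between mirrored layers. The discriminator is a PatchGAN, which classifies each N×N patch of the (input, output) pair as real or fake.
- Training Loop
  - Train the discriminator to tell real pairs from generated ones, and train the generator with a combined adversarial and L1 loss.
- Inference
  - After training, pass a new input image through the generator to produce the translated output.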
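The training step combines two losses. In the original Pix2Pix paper (Isola et al., 2017, "Image-to-Image Translation with Conditional Adversarial Networks"), the objective is written as follows, where $x$ is the input image, $y$ the target image, and $z$ a noise source (implemented as dropout in the paper):

$$
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\big[\log D(x, y)\big] + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big]
$$

$$
\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big]
$$

$$
G^{*} = \arg\min_{G} \max_{D} \; \mathcal{L}_{cGAN}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
$$

The paper uses $\lambda = 100$. The adversarial term pushes outputs toward realistic textures, while the L1 term keeps them close to the ground-truth target.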

Here are code snippets showing the above steps:
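A minimal PyTorch sketch of the three steps is shown below. PyTorch, the layer sizes, and the names `UNetGenerator`, `PatchDiscriminator`, `train_step` and `translate` are assumptions made for illustration. The U-Net here has only three levels, far shallower than the 256×256 network in the paper. The halved discriminator loss, λ = 100, and the Adam settings (lr 2e-4, β1 = 0.5) follow the paper. The generator's adversarial term uses the common non-saturating form (BCE against "real" labels).

```python
import torch
import torch.nn as nn


def down(in_ch, out_ch, norm=True):
    """Encoder block: a stride-2 conv halves height and width."""
    layers = [nn.Conv2d(in_ch, out_ch, 4, stride=2, padding=1, bias=not norm)]
    if norm:
        layers.append(nn.BatchNorm2d(out_ch))
    return nn.Sequential(*layers, nn.LeakyReLU(0.2))


def up(in_ch, out_ch, dropout=False):
    """Decoder block: a stride-2 transposed conv doubles height and width."""
    layers = [nn.ConvTranspose2d(in_ch, out_ch, 4, stride=2, padding=1, bias=False),
              nn.BatchNorm2d(out_ch)]
    if dropout:
        layers.append(nn.Dropout(0.5))
    return nn.Sequential(*layers, nn.ReLU())


class UNetGenerator(nn.Module):
    """Three-level U-Net: encoder features are concatenated into the decoder."""
    def __init__(self, in_ch=3, out_ch=3, base=64):
        super().__init__()
        self.d1 = down(in_ch, base, norm=False)          # H/2
        self.d2 = down(base, base * 2)                   # H/4
        self.d3 = down(base * 2, base * 4)               # H/8 (bottleneck)
        self.u1 = up(base * 4, base * 2, dropout=True)   # H/4
        self.u2 = up(base * 4, base)                     # H/2, input = u1 output + d2 skip
        self.out = nn.Sequential(                        # H, input = u2 output + d1 skip
            nn.ConvTranspose2d(base * 2, out_ch, 4, stride=2, padding=1), nn.Tanh())

    def forward(self, x):
        e1 = self.d1(x)
        e2 = self.d2(e1)
        y = self.u1(self.d3(e2))
        y = self.u2(torch.cat([y, e2], dim=1))           # skip connection
        return self.out(torch.cat([y, e1], dim=1))       # skip connection


class PatchDiscriminator(nn.Module):
    """PatchGAN: returns a grid of logits, one per overlapping patch."""
    def __init__(self, in_ch=3, out_ch=3, base=64):
        super().__init__()
        self.net = nn.Sequential(
            down(in_ch + out_ch, base, norm=False),
            down(base, base * 2),
            nn.Conv2d(base * 2, 1, 4, stride=1, padding=1))

    def forward(self, x, y):
        # Conditional: D always sees the input image x alongside the candidate y.
        return self.net(torch.cat([x, y], dim=1))


bce, l1 = nn.BCEWithLogitsLoss(), nn.L1Loss()
LAMBDA_L1 = 100.0  # weight on the L1 term, as in the Pix2Pix paper


def train_step(G, D, opt_G, opt_D, x, y):
    G.train()
    D.train()
    fake = G(x)
    # 1) Discriminator: real pairs -> 1, generated pairs -> 0 (loss halved, as in the paper).
    d_real, d_fake = D(x, y), D(x, fake.detach())
    loss_D = 0.5 * (bce(d_real, torch.ones_like(d_real)) +
                    bce(d_fake, torch.zeros_like(d_fake)))
    opt_D.zero_grad()
    loss_D.backward()
    opt_D.step()
    # 2) Generator: fool D (adversarial loss) and stay close to y (L1 loss).
    d_fake = D(x, fake)
    loss_G = bce(d_fake, torch.ones_like(d_fake)) + LAMBDA_L1 * l1(fake, y)
    opt_G.zero_grad()
    loss_G.backward()
    opt_G.step()
    return loss_D.item(), loss_G.item()


@torch.no_grad()
def translate(G, x):
    # Eval mode disables dropout; the paper instead kept dropout active at test time.
    G.eval()
    return G(x)


if __name__ == "__main__":
    torch.manual_seed(0)
    G, D = UNetGenerator(), PatchDiscriminator()
    opt_G = torch.optim.Adam(G.parameters(), lr=2e-4, betas=(0.5, 0.999))
    opt_D = torch.optim.Adam(D.parameters(), lr=2e-4, betas=(0.5, 0.999))
    x, y = torch.randn(1, 3, 32, 32), torch.randn(1, 3, 32, 32)  # stand-in image pair
    for _ in range(3):
        print(train_step(G, D, opt_G, opt_D, x, y))
    print(translate(G, x).shape)  # torch.Size([1, 3, 32, 32])
```

Because the discriminator ends in a stride-1 convolution rather than a fully connected layer, a 32×32 input yields a 7×7 grid of patch logits instead of a single score. This is what makes it a PatchGAN.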

This is a simplified Pix2Pix implementation. You can extend it with data loading, checkpointing, and more advanced training techniques.
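As one possible starting point for data loading, the sketch below assumes the layout used by the commonly distributed pix2pix datasets (e.g. facades), where each file stores the input and target images side by side in one image. Which half is the input varies by dataset, so it is a parameter here. `split_pair` and `PairedImages` are hypothetical names. The dataset works on pre-loaded float tensors in [0, 1]; real files could be read, for example, with `torchvision.io.read_image(path).float() / 255`.

```python
import torch
from torch.utils.data import DataLoader, Dataset


def split_pair(img, input_on_left=True):
    """Split a side-by-side pair of shape (C, H, 2W) into (input, target).

    Assumes float pixels in [0, 1] and rescales them to [-1, 1] to match a
    generator whose last layer is tanh.
    """
    w = img.shape[-1]
    if w % 2:
        raise ValueError("expected an even width: two images side by side")
    left, right = img[..., : w // 2], img[..., w // 2 :]
    x, y = (left, right) if input_on_left else (right, left)
    return x * 2 - 1, y * 2 - 1


class PairedImages(Dataset):
    """Hypothetical dataset over pre-loaded pair tensors; replace with real file loading."""

    def __init__(self, pairs, input_on_left=True):
        self.pairs = pairs
        self.input_on_left = input_on_left

    def __len__(self):
        return len(self.pairs)

    def __getitem__(self, i):
        return split_pair(self.pairs[i], self.input_on_left)


if __name__ == "__main__":
    pairs = [torch.rand(3, 64, 128) for _ in range(4)]  # random stand-ins for 4 pair images
    loader = DataLoader(PairedImages(pairs), batch_size=2, shuffle=True)
    for x, y in loader:
        print(x.shape, y.shape)  # each batch: two tensors of shape (2, 3, 64, 64)
```

Rescaling to [-1, 1] inside the dataset keeps the targets in the same range as a tanh-terminated generator, so the L1 loss compares like with like.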
