To host a Hugging Face model on Google Cloud AI for scalable deployment, follow these steps:

- Export and Save the Model

  - Use the `transformers` library to save the Hugging Face model locally.

- Package the Model

  - Compress the saved model directory to upload it to Google Cloud.
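As a minimal sketch of these two steps, the snippet below saves a model with `save_pretrained` and compresses the resulting folder with the standard-library `tarfile` module. The function names `save_model` and `package_model`, the choice of `AutoModelForSequenceClassification`, and the `.tar.gz` format are assumptions made for illustration; swap in the model class that matches your task.

```python
import tarfile
from pathlib import Path


def save_model(model_name: str, out_dir: str) -> None:
    """Download a model and its tokenizer, then save both into out_dir."""
    # Imported lazily so package_model() can be used without transformers.
    # Assumption: the model is a sequence-classification model.
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    AutoModelForSequenceClassification.from_pretrained(model_name).save_pretrained(out_dir)
    AutoTokenizer.from_pretrained(model_name).save_pretrained(out_dir)


def package_model(model_dir: str, archive_path: str) -> str:
    """Compress model_dir into a gzip-compressed tar archive and return its path."""
    src = Path(model_dir)
    with tarfile.open(archive_path, "w:gz") as tar:
        # arcname keeps paths inside the archive relative to the model folder.
        tar.add(src, arcname=src.name)
    return archive_path
```

For example, calling `save_model(name, "model")` with the Hub name of your model, followed by `package_model("model", "model.tar.gz")`, would leave a `model.tar.gz` archive ready for upload.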

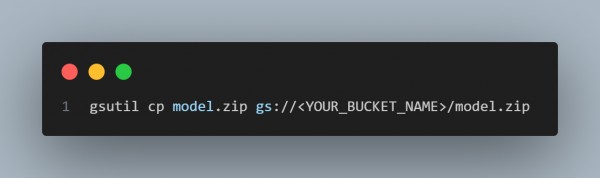

- Upload to Google Cloud Storage

  - Upload the model archive to a Google Cloud Storage bucket.

- Write a Flask App to Serve the Model

  - Create a Python script (`app.py`) to load and serve the model using Flask.

- Create a Dockerfile

  - Package the Flask app in a Docker container.
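The upload step could look roughly like the following, assuming the `google-cloud-storage` client library is installed and application default credentials are configured. The helper name `upload_archive` and its optional `client` parameter are hypothetical; the parameter exists only so the function can be exercised with a stand-in client.

```python
from typing import Any, Optional


def upload_archive(archive_path: str, bucket_name: str, blob_name: str,
                   client: Optional[Any] = None) -> str:
    """Upload a local archive to a Cloud Storage bucket and return its gs:// URI."""
    if client is None:
        # Imported lazily; needs google-cloud-storage and valid credentials.
        from google.cloud import storage
        client = storage.Client()

    bucket = client.bucket(bucket_name)      # reference to an existing bucket
    blob = bucket.blob(blob_name)            # object path inside the bucket
    blob.upload_from_filename(archive_path)  # performs the actual upload
    return f"gs://{bucket_name}/{blob_name}"
```

The bucket must already exist. The returned `gs://` URI is what later steps would use to locate the archive.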

Here is the code you can refer to:
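A minimal sketch of what `app.py` might contain is shown below. It assumes the saved directory holds a text-classification model, that requests arrive as JSON of the form `{"text": "..."}`, and that the route is named `/predict`; none of these details come from the steps above. The helper `handle_predict` is a hypothetical name that keeps input validation separate from Flask.

```python
from typing import Any, Callable, Dict, Tuple


def handle_predict(payload: Any,
                   predict_fn: Callable[[str], Any]) -> Tuple[Dict[str, Any], int]:
    """Validate the JSON payload and run the model; returns (body, HTTP status)."""
    if not isinstance(payload, dict) or not isinstance(payload.get("text"), str):
        return {"error": "expected a JSON body with a string field 'text'"}, 400
    return {"prediction": predict_fn(payload["text"])}, 200


def create_app(model_dir: str = "model"):
    """Build the Flask app; the model is loaded once, when the app is created."""
    # Imported lazily so handle_predict() can be used without these packages.
    from flask import Flask, jsonify, request
    from transformers import pipeline

    # Assumption: model_dir contains a saved text-classification model and tokenizer.
    classifier = pipeline("text-classification", model=model_dir)
    app = Flask(__name__)

    @app.route("/predict", methods=["POST"])
    def predict():
        # silent=True yields None instead of raising on a malformed body.
        body, status = handle_predict(request.get_json(silent=True), classifier)
        return jsonify(body), status

    return app
```

To serve it locally, one option is `create_app().run(host="0.0.0.0", port=8080)`; the port is an assumption and should match whatever the container exposes.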

By following the steps above and using the code snippets, you can host a Hugging Face model on Google Cloud AI for scalable deployment.
