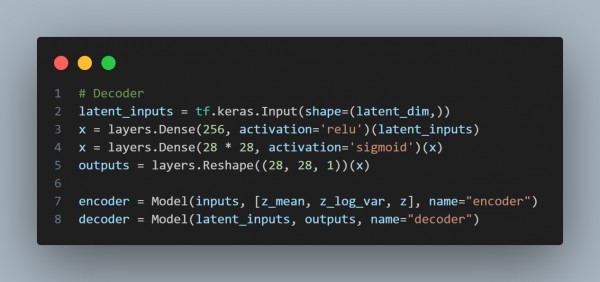

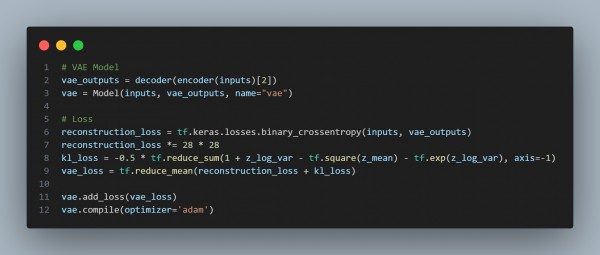

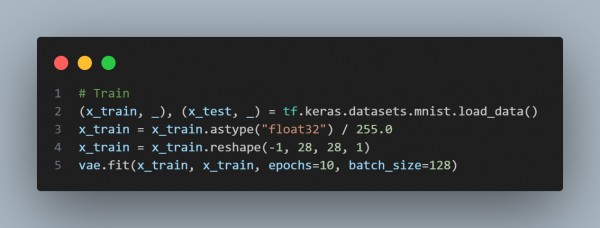

To train a simple Variational Autoencoder (VAE) in TensorFlow, you can refer to the code snippets above.

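In the above code:

- The Encoder maps the input to the latent space, producing a mean (`z_mean`) and a log variance (`z_log_var`).
- Reparameterization uses a custom layer to keep the sampling step differentiable: the latent vector is computed from `z_mean`, `z_log_var`, and independent random noise, so gradients can flow back to the encoder.
- The Decoder reconstructs the data from the latent space.
- The Loss combines the reconstruction loss with the KL divergence.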
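The reparameterization step and the KL term reduce to two short formulas: z = mean + exp(0.5 * log_var) * epsilon with epsilon drawn from N(0, 1), and KL = -0.5 * sum(1 + log_var - mean^2 - exp(log_var)). The framework-free sketch below evaluates them on plain Python lists so the arithmetic is easy to check by hand. The function names `reparameterize` and `kl_divergence` are hypothetical and not taken from the snippets above.

```python
import math
import random


def reparameterize(z_mean, z_log_var, epsilon=None):
    """Sample z = mean + sigma * epsilon, where sigma = exp(0.5 * log_var).

    All randomness lives in epsilon ~ N(0, 1), so z is a deterministic
    (and therefore differentiable) function of z_mean and z_log_var.
    """
    if epsilon is None:
        epsilon = [random.gauss(0.0, 1.0) for _ in z_mean]
    return [m + math.exp(0.5 * lv) * e for m, lv, e in zip(z_mean, z_log_var, epsilon)]


def kl_divergence(z_mean, z_log_var):
    """Closed-form KL( N(mean, exp(log_var)) || N(0, 1) ), summed over latent dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(z_mean, z_log_var))


# Example: mean 1.0 with variance 1.0 gives a KL contribution of 0.5,
# while a latent that already matches the N(0, 1) prior contributes 0.
print(kl_divergence([1.0], [0.0]), kl_divergence([0.0], [0.0]))
```

Passing `epsilon` explicitly makes the sampling reproducible, which is handy for checking that z = 1.0 + 2.0 * 1.0 when the log variance is log(4).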

Together, these components train a basic VAE on the MNIST dataset.
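For a copy-pasteable starting point, here is a minimal sketch of the same pipeline in TensorFlow with Keras. It assumes dense (not convolutional) layers, a 2-dimensional latent space, binary cross-entropy as the reconstruction loss, and the Adam optimizer with a `tf.GradientTape` training loop; these choices, and the names `Sampling`, `build_encoder`, `build_decoder`, `vae_loss`, `train`, `load_mnist` and `LATENT_DIM`, are hypothetical and may differ from the snippets above.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

LATENT_DIM = 2  # assumption: a small latent space, chosen for illustration only


class Sampling(layers.Layer):
    """Reparameterization trick as a custom layer: z = mean + exp(0.5 * log_var) * eps."""

    def call(self, inputs):
        z_mean, z_log_var = inputs
        epsilon = tf.random.normal(shape=tf.shape(z_mean))
        return z_mean + tf.exp(0.5 * z_log_var) * epsilon


def build_encoder(latent_dim=LATENT_DIM):
    # Assumed architecture: flatten 28x28 images, one hidden dense layer.
    inputs = keras.Input(shape=(28, 28, 1))
    x = layers.Flatten()(inputs)
    x = layers.Dense(256, activation="relu")(x)
    z_mean = layers.Dense(latent_dim, name="z_mean")(x)
    z_log_var = layers.Dense(latent_dim, name="z_log_var")(x)
    z = Sampling()([z_mean, z_log_var])
    return keras.Model(inputs, [z_mean, z_log_var, z], name="encoder")


def build_decoder(latent_dim=LATENT_DIM):
    # Mirrors the encoder; sigmoid keeps outputs in [0, 1] like the scaled pixels.
    latent_inputs = keras.Input(shape=(latent_dim,))
    x = layers.Dense(256, activation="relu")(latent_inputs)
    x = layers.Dense(28 * 28, activation="sigmoid")(x)
    outputs = layers.Reshape((28, 28, 1))(x)
    return keras.Model(latent_inputs, outputs, name="decoder")


def vae_loss(x, x_recon, z_mean, z_log_var):
    # Reconstruction: per-pixel binary cross-entropy, summed per image, averaged over the batch.
    recon = tf.reduce_mean(
        tf.reduce_sum(keras.losses.binary_crossentropy(x, x_recon), axis=(1, 2))
    )
    # KL divergence between q(z|x) = N(z_mean, exp(z_log_var)) and the N(0, I) prior.
    kl = -0.5 * tf.reduce_mean(
        tf.reduce_sum(1 + z_log_var - tf.square(z_mean) - tf.exp(z_log_var), axis=1)
    )
    return recon + kl


def train(x_train, epochs=10, batch_size=128):
    encoder, decoder = build_encoder(), build_decoder()
    optimizer = keras.optimizers.Adam(learning_rate=1e-3)
    variables = encoder.trainable_variables + decoder.trainable_variables
    dataset = (
        tf.data.Dataset.from_tensor_slices(x_train)
        .shuffle(len(x_train))
        .batch(batch_size)
    )
    for epoch in range(epochs):
        for x in dataset:
            with tf.GradientTape() as tape:
                z_mean, z_log_var, z = encoder(x, training=True)
                x_recon = decoder(z, training=True)
                loss = vae_loss(x, x_recon, z_mean, z_log_var)
            grads = tape.gradient(loss, variables)
            optimizer.apply_gradients(zip(grads, variables))
        print(f"epoch {epoch + 1}: last batch loss = {float(loss):.2f}")
    return encoder, decoder, float(loss)


def load_mnist():
    # Scale pixels to [0, 1] and add a channel axis: shape (60000, 28, 28, 1).
    (x_train, _), _ = keras.datasets.mnist.load_data()
    return (x_train.astype("float32") / 255.0)[..., np.newaxis]
```

To train on MNIST you would call something like `encoder, decoder, _ = train(load_mnist(), epochs=10)`; afterwards, `decoder(tf.random.normal((16, LATENT_DIM)))` generates new digit-like images from random latent vectors. The explicit `GradientTape` loop is used here instead of `model.fit` so that the two loss terms stay visible in one place.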
