The challenges in implementing distributed rate limiting using Hazelcast are as follows:

Challenges:

- Consistency Issues: Ensuring that every node enforces the limit against the same shared count, so a client cannot exceed its quota by spreading requests across nodes.

- Performance Overhead: Distributed locks and data replication add network round trips, which increases the latency of every rate-limit check.

- Fault Tolerance: Handling node failures without losing rate-limiting state.

Here are some ways to address these challenges:

- Use Hazelcast's IAtomicLong (a distributed AtomicLong) for counters, so that increments are atomic across the cluster.

- Apply bucket-based algorithms (for example, token buckets or fixed time-window buckets) to reduce contention on a single shared counter.

- Configure persistence or backups to handle node failures.
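To illustrate the counter and bucket ideas, here is a minimal fixed-window sketch. It keeps one counter per (client, time window) pair, so requests in different windows never contend on the same counter. This is an assumption-laden stand-in rather than Hazelcast code. `ConcurrentHashMap` and `AtomicLong` replace the distributed structures so the example runs on a single JVM. In a cluster, each counter would presumably be an `IAtomicLong` obtained from the CP subsystem, for example via `getCPSubsystem().getAtomicLong(key)`. The class name, the `"client:window"` key format, and the `evictBefore` cleanup method are hypothetical.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical fixed-window ("bucket") rate limiter: one counter per (client, window).
// ConcurrentHashMap + AtomicLong are local stand-ins for Hazelcast's distributed
// counters (assumption: in a cluster each counter would be an IAtomicLong).
class FixedWindowRateLimiter {
    private final long limit;        // max requests allowed per window
    private final long windowMillis; // window length in milliseconds
    private final ConcurrentHashMap<String, AtomicLong> counters = new ConcurrentHashMap<>();

    FixedWindowRateLimiter(long limit, long windowMillis) {
        this.limit = limit;
        this.windowMillis = windowMillis;
    }

    // Returns true if a request from clientId at nowMillis is within the limit.
    boolean tryAcquire(String clientId, long nowMillis) {
        long window = nowMillis / windowMillis; // index of the current bucket
        String key = clientId + ":" + window;   // e.g. "alice:0"
        // Atomic increment; the distributed analogue would be IAtomicLong.incrementAndGet().
        long count = counters.computeIfAbsent(key, k -> new AtomicLong()).incrementAndGet();
        return count <= limit;
    }

    // Cleanup: drop counters that belong to windows before the current one.
    void evictBefore(long nowMillis) {
        long current = nowMillis / windowMillis;
        counters.keySet().removeIf(
            k -> Long.parseLong(k.substring(k.lastIndexOf(':') + 1)) < current);
    }

    // Number of counters currently held (for inspection).
    int size() {
        return counters.size();
    }
}
```

With a limit of 2 per 1000 ms, a third request from the same client at 999 ms is rejected, but a new request at 1000 ms falls into a fresh bucket and passes. This is the usual trade-off of fixed windows. Contention is low and each check is a single increment, but a client can send up to twice the limit in a short burst that straddles a window boundary.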

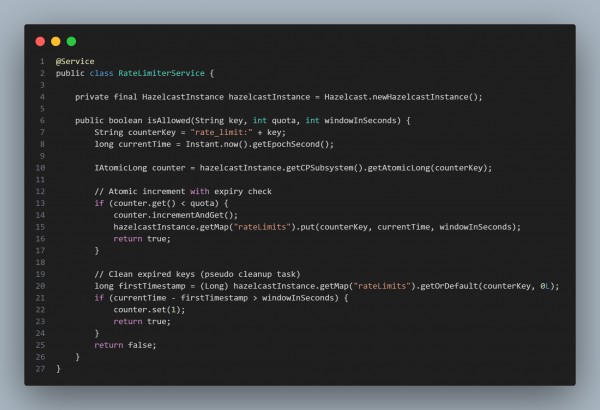

Here is the code you can refer to:

In the above code, we use Hazelcast's IAtomicLong for atomic rate counters, store request timestamps in a distributed map so that expired entries can be cleaned up, and leverage Hazelcast's CP subsystem to keep the counters strongly consistent even when the network is partitioned.
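The timestamp-based part of that description corresponds to a sliding-window log. Each client's recent request timestamps are kept in a map, and entries older than the window are pruned on every check. The sketch below is a hedged, single-JVM illustration, not the original code. `ConcurrentHashMap.compute` stands in for a distributed map such as a Hazelcast `IMap`, where per-key atomicity would have to come from something like an entry processor or a key lock (an assumption, not shown). The class and method names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sliding-window-log limiter: stores request timestamps per client
// and prunes expired ones on each call. ConcurrentHashMap stands in for a
// distributed map; compute() makes each per-client update atomic on this JVM only.
class SlidingWindowLogLimiter {
    private final int limit;         // max requests in any window of windowMillis
    private final long windowMillis; // window length in milliseconds
    private final ConcurrentHashMap<String, Deque<Long>> log = new ConcurrentHashMap<>();

    SlidingWindowLogLimiter(int limit, long windowMillis) {
        this.limit = limit;
        this.windowMillis = windowMillis;
    }

    // Returns true (and records the timestamp) if the request is within the limit.
    boolean tryAcquire(String clientId, long nowMillis) {
        boolean[] allowed = new boolean[1];
        log.compute(clientId, (id, existing) -> {
            Deque<Long> ts = (existing == null) ? new ArrayDeque<>() : existing;
            // Cleanup: drop timestamps outside the window (nowMillis - windowMillis, nowMillis].
            while (!ts.isEmpty() && ts.peekFirst() <= nowMillis - windowMillis) {
                ts.pollFirst();
            }
            if (ts.size() < limit) {
                ts.addLast(nowMillis);
                allowed[0] = true;
            }
            return ts;
        });
        return allowed[0];
    }

    // Number of timestamps currently recorded for a client (read atomically).
    int recorded(String clientId) {
        int[] n = new int[1];
        log.computeIfPresent(clientId, (id, ts) -> {
            n[0] = ts.size();
            return ts;
        });
        return n[0];
    }
}
```

Compared with fixed windows, the log enforces the limit over any rolling interval, so the boundary burst disappears. The cost is storing up to `limit` timestamps per client instead of a single counter.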
