Stochastic sampling introduces randomness into the choice of each next token, allowing for diverse and creative text outputs.

- Deterministic methods like greedy search or beam search produce predictable and often repetitive results.

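- Stochastic methods such as top-k or nucleus (top-p) sampling pick the next token at random, but only from the most likely candidates. This balances randomness with control and produces more natural-sounding text.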
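To make the contrast concrete, here is a minimal plain-Python sketch with no real model involved. It assumes a hypothetical, hand-written next-token distribution, and `greedy_pick` and `top_k_sample` are illustrative names, not a library API. Greedy selection always returns the same token. Top-k sampling draws at random, but only from the k most probable tokens.

```python
import random

# Hypothetical next-token probabilities for a prompt like "The cat sat on the"
next_token_probs = {
    "mat": 0.50,
    "sofa": 0.20,
    "floor": 0.15,
    "roof": 0.10,
    "moon": 0.05,
}

def greedy_pick(probs: dict[str, float]) -> str:
    """Deterministic: always return the single most probable token."""
    return max(probs, key=probs.get)

def top_k_sample(probs: dict[str, float], k: int, rng: random.Random) -> str:
    """Stochastic: keep only the k most probable tokens, then sample among them."""
    top = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    tokens = [t for t, _ in top]
    weights = [p for _, p in top]
    # random.choices treats weights as relative, which renormalizes over the kept tokens
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random(0)
print([greedy_pick(next_token_probs) for _ in range(5)])  # → ['mat', 'mat', 'mat', 'mat', 'mat']
print([top_k_sample(next_token_probs, k=3, rng=rng) for _ in range(5)])
```

With k=3, "roof" and "moon" can never appear, so the randomness stays within the plausible candidates. With k=1, top-k sampling reduces to greedy decoding.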

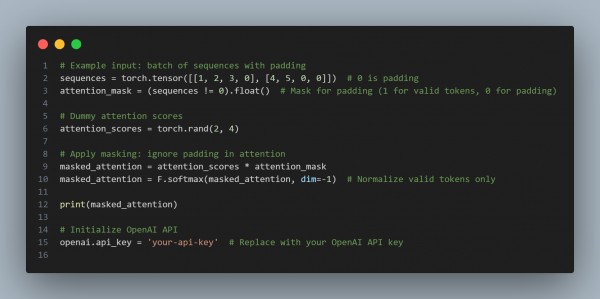

Here is the code snippet showing how it is done:

The code above compares two decoding configurations:

- Greedy Decoding:

  - temperature=0: No randomness. The model always selects the most probable next token.

  - Result: Safe but predictable, and sometimes repetitive.

- Stochastic Sampling:

  - temperature=0.8: The model samples from a softmax over the logits divided by 0.8. Compared with greedy decoding, this adds controlled randomness while still favoring likely tokens.

  - top_p=0.9: Implements nucleus sampling. The model samples only from the smallest set of most likely tokens whose cumulative probability reaches 0.9, and it ignores the low-probability tail.

  - Result: Diverse, natural-sounding outputs, well suited to storytelling and other creative tasks.
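The two settings described above can be sketched from scratch. This is a minimal, library-free illustration, not the snippet the article refers to. The helpers `softmax_with_temperature`, `nucleus_filter`, and `sample_next_token` are hypothetical, and the logits are made-up numbers for a five-token vocabulary. The sketch treats temperature=0 as greedy decoding, because dividing by zero is undefined. It also applies temperature before the top-p cut, which is a common ordering. Real libraries may differ in edge cases such as ties or the exact cutoff rule.

```python
import math
import random

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Divide logits by the temperature, then apply a numerically stable softmax."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def nucleus_filter(probs: list[float], top_p: float) -> list[int]:
    """Indices of the smallest set of most likely tokens whose cumulative probability >= top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return kept

def sample_next_token(logits: list[float], temperature: float, top_p: float,
                      rng: random.Random) -> int:
    """Return the index of the next token."""
    if temperature == 0:
        # Greedy decoding: pick the highest-scoring token, no randomness
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax_with_temperature(logits, temperature)
    kept = nucleus_filter(probs, top_p)
    # Sample only among the nucleus; weights are renormalized implicitly
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]

vocab = ["mat", "sofa", "floor", "roof", "moon"]
logits = [2.0, 1.1, 0.8, 0.4, -0.3]  # made-up scores, for illustration only
rng = random.Random(0)

print(vocab[sample_next_token(logits, temperature=0, top_p=1.0, rng=rng)])  # → mat
print([vocab[sample_next_token(logits, temperature=0.8, top_p=0.9, rng=rng)] for _ in range(5)])
```

With these logits at temperature 0.8, the three most likely tokens cover about 89% of the probability mass. Adding "roof" brings the total to about 97%, so the nucleus at top_p=0.9 keeps four tokens and "moon" is never sampled.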

This demonstrates the trade-off between consistency (greedy) and creativity (stochastic).
