Attention mechanisms can be adapted for generative models with varying data granularity by using hierarchical or multi-scale attention, which processes the data at several levels of granularity (e.g., word level and sentence level).

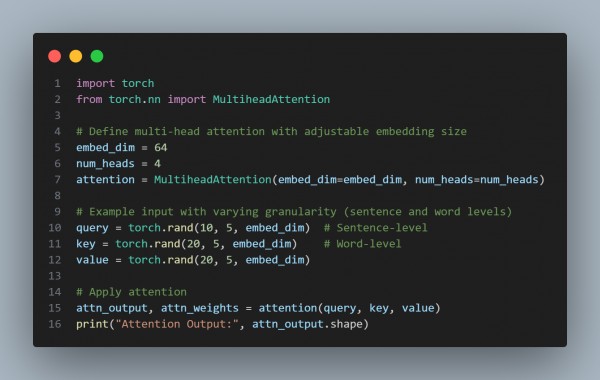

One way to implement an attention mechanism for generative models with varying data granularity is shown in the following code snippet:
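A minimal sketch of two-level (hierarchical) attention, written in plain Python so it runs without extra libraries. It assumes dot-product scoring and uses fixed, hypothetical query vectors `word_q` and `sent_q`; in a trained model these would be learned parameters or derived from the decoder state. Word-level attention pools each sentence into one vector, and sentence-level attention then pools those sentence vectors into a single context vector.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(query: Vector, items: List[Vector]) -> Vector:
    """Dot-product attention: weight each item by softmax(query . item), then sum."""
    weights = softmax([dot(query, v) for v in items])
    dim = len(items[0])
    return [sum(w * v[i] for w, v in zip(weights, items)) for i in range(dim)]


def hierarchical_attention(doc: List[List[Vector]],
                           word_query: Vector,
                           sent_query: Vector) -> Vector:
    """Word-level attention pools each sentence; sentence-level attention pools the document."""
    sentence_vectors = [attend(word_query, sentence) for sentence in doc]
    return attend(sent_query, sentence_vectors)


# Hypothetical toy input: two sentences of 2-D word embeddings.
doc = [
    [[1.0, 0.0], [0.0, 1.0]],              # sentence 1: two words
    [[0.5, 0.5], [1.0, 1.0], [0.0, 0.2]],  # sentence 2: three words
]
word_q = [1.0, 0.0]  # hypothetical word-level query
sent_q = [0.0, 1.0]  # hypothetical sentence-level query
print(hierarchical_attention(doc, word_q, sent_q))
```

Because each level computes its own weights, the same structure extends to further levels (e.g., paragraphs) by adding another `attend` call on top.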

This approach lets the model dynamically focus on fine-grained (word-level) or coarse-grained (sentence-level) information, depending on what the task requires.
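One way to make the choice between fine-grained and coarse-grained information explicit is to attend separately at each granularity and blend the two results with a gate. The sketch below assumes this gated-fusion scheme (one option among several, not necessarily what the original snippet does); `gate_weights` is a hypothetical parameter vector that a real model would learn, and `state` stands in for the decoder state at the current generation step.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, items: List[Vector]) -> Vector:
    """Dot-product attention over a list of vectors."""
    weights = softmax([sum(q * x for q, x in zip(query, v)) for v in items])
    return [sum(w * v[i] for w, v in zip(weights, items)) for i in range(len(items[0]))]


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def multi_granularity_context(state: Vector,
                              words: List[Vector],
                              sentences: List[Vector],
                              gate_weights: Vector) -> Tuple[Vector, float]:
    """Blend word-level (fine) and sentence-level (coarse) contexts with a scalar gate."""
    fine = attend(state, words)
    coarse = attend(state, sentences)
    # g close to 1 favours fine detail; g close to 0 favours coarse context.
    g = sigmoid(sum(w * s for w, s in zip(gate_weights, state)))
    context = [g * f + (1.0 - g) * c for f, c in zip(fine, coarse)]
    return context, g


# Hypothetical toy data: three word vectors grouped into two sentences.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
sentences = [[0.5, 0.5], [1.0, 1.0]]  # mean of words 0-1, and word 2 alone
state = [0.3, 0.7]                    # hypothetical decoder state
context, gate = multi_granularity_context(state, words, sentences, gate_weights=[2.0, -1.0])
print(context, gate)
```

Since the gate depends on the decoder state, it can change at every generation step, which is what allows the model to shift between granularities as it generates.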

In short, attending at more than one level of granularity lets a generative model handle data whose granularity varies.
