You can improve the interpretability of a GAN's latent space with the following techniques:

- Latent Space Normalization: Normalize latent vectors to a consistent scale so that traversals through the space behave predictably.

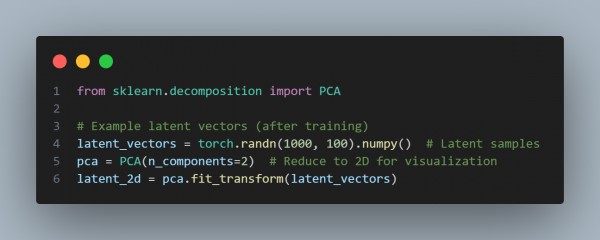
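A minimal NumPy sketch of latent normalization, assuming the latents are stored row-wise in an array of shape `(batch, dim)`. It rescales each vector to unit root-mean-square magnitude, similar in spirit to the second-moment normalization StyleGAN applies to its input latents. The function name `normalize_latents` and the array shapes are illustrative assumptions, not taken from the original code.

```python
import numpy as np


def normalize_latents(z: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Rescale each latent vector (row of z) to unit root-mean-square magnitude.

    Afterwards every vector has an L2 norm of about sqrt(dim), the typical norm of a
    standard Gaussian sample, so all latents sit on a common scale before traversal.
    """
    # eps guards against division by zero for an all-zero vector.
    rms = np.sqrt(np.mean(z ** 2, axis=1, keepdims=True) + eps)
    return z / rms


rng = np.random.default_rng(0)
# Hypothetical latents drawn on deliberately uneven scales.
z = rng.standard_normal((4, 128)) * rng.uniform(0.5, 3.0, size=(4, 1))
z_norm = normalize_latents(z)
print(np.linalg.norm(z_norm, axis=1))  # each close to sqrt(128) ≈ 11.31
```

Dividing by the L2 norm instead (unit-norm vectors) is an equally common choice; what matters is that every latent you compare or traverse uses the same scale.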

- PCA on Latent Space: Apply PCA to a set of latent vectors to reduce their dimensionality and expose the main directions of variation, which are easier to interpret.

- Latent Space Interpolation: Interpolate between two latent vectors and generate an output at each step to visualize smooth transitions.

- Cluster Analysis: Cluster latent vectors to identify meaningful subspaces.
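The PCA, interpolation, and clustering steps can be sketched in NumPy as follows. This is an illustrative sketch, not the code from the image: random Gaussian samples stand in for real latent vectors, the function names (`pca_directions`, `lerp`, `slerp`, `kmeans`) are hypothetical, and `generator` in the final comment is a placeholder for your own trained model. PCA is computed from an SVD of the centered latents, interpolation is shown in both linear and spherical (slerp) form, and clustering uses a plain k-means loop.

```python
import numpy as np


def pca_directions(z: np.ndarray, k: int):
    """Top-k principal directions (unit-norm rows) of latent samples z, plus explained-variance ratios."""
    z_centered = z - z.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(z_centered, full_matrices=False)
    var = s ** 2
    return vt[:k], var[:k] / var.sum()


def lerp(z0: np.ndarray, z1: np.ndarray, steps: int) -> np.ndarray:
    """Evenly spaced points on the straight line from z0 to z1 (endpoints included)."""
    t = np.linspace(0.0, 1.0, steps)[:, None]
    return (1.0 - t) * z0 + t * z1


def slerp(z0: np.ndarray, z1: np.ndarray, steps: int, eps: float = 1e-8) -> np.ndarray:
    """Spherical interpolation; the angle is measured between the normalized vectors."""
    cos_omega = np.dot(z0, z1) / (np.linalg.norm(z0) * np.linalg.norm(z1))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    sin_omega = np.sin(omega)
    if sin_omega < eps:  # (anti)parallel vectors: slerp is ill-defined, fall back to lerp
        return lerp(z0, z1, steps)
    t = np.linspace(0.0, 1.0, steps)[:, None]
    return (np.sin((1.0 - t) * omega) * z0 + np.sin(t * omega) * z1) / sin_omega


def kmeans(z: np.ndarray, k: int, iters: int = 50, seed: int = 0):
    """Plain k-means: returns a cluster label per latent vector and the k cluster centers."""
    rng = np.random.default_rng(seed)
    centers = z[rng.choice(len(z), size=k, replace=False)]
    for _ in range(iters):
        # Squared distance from every latent (rows) to every center (columns).
        dists = ((z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of its members; keep it in place if the cluster is empty.
        new_centers = np.array([
            z[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers


rng = np.random.default_rng(0)
z = rng.standard_normal((500, 64))  # stand-in for latent vectors sampled from your GAN's prior

dirs, ratios = pca_directions(z, k=5)
# Traverse from one latent along the first principal direction.
traversal = z[0] + np.linspace(-3.0, 3.0, 7)[:, None] * dirs[0]
path = slerp(z[0], z[1], steps=8)
labels, centers = kmeans(z, k=4)

# images = generator(path)  # hypothetical: decode each row with your own trained generator
print(ratios.round(3), traversal.shape, path.shape, np.bincount(labels, minlength=4))
```

Spherical interpolation is often preferred for Gaussian latents because the straight-line midpoint between two independent high-dimensional samples has a norm about 1/sqrt(2) times that of a typical sample. The traversal range of ±3 along the PCA direction is an arbitrary choice to tune for your model.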

In the code above, normalization keeps latent vectors on a consistent scale so that generation behaves smoothly, PCA and clustering reveal interpretable dimensions and patterns, and interpolation helps you explore transitions between latent points.

Hence, by using these techniques, you can make the latent space of GAN models easier to interpret.
